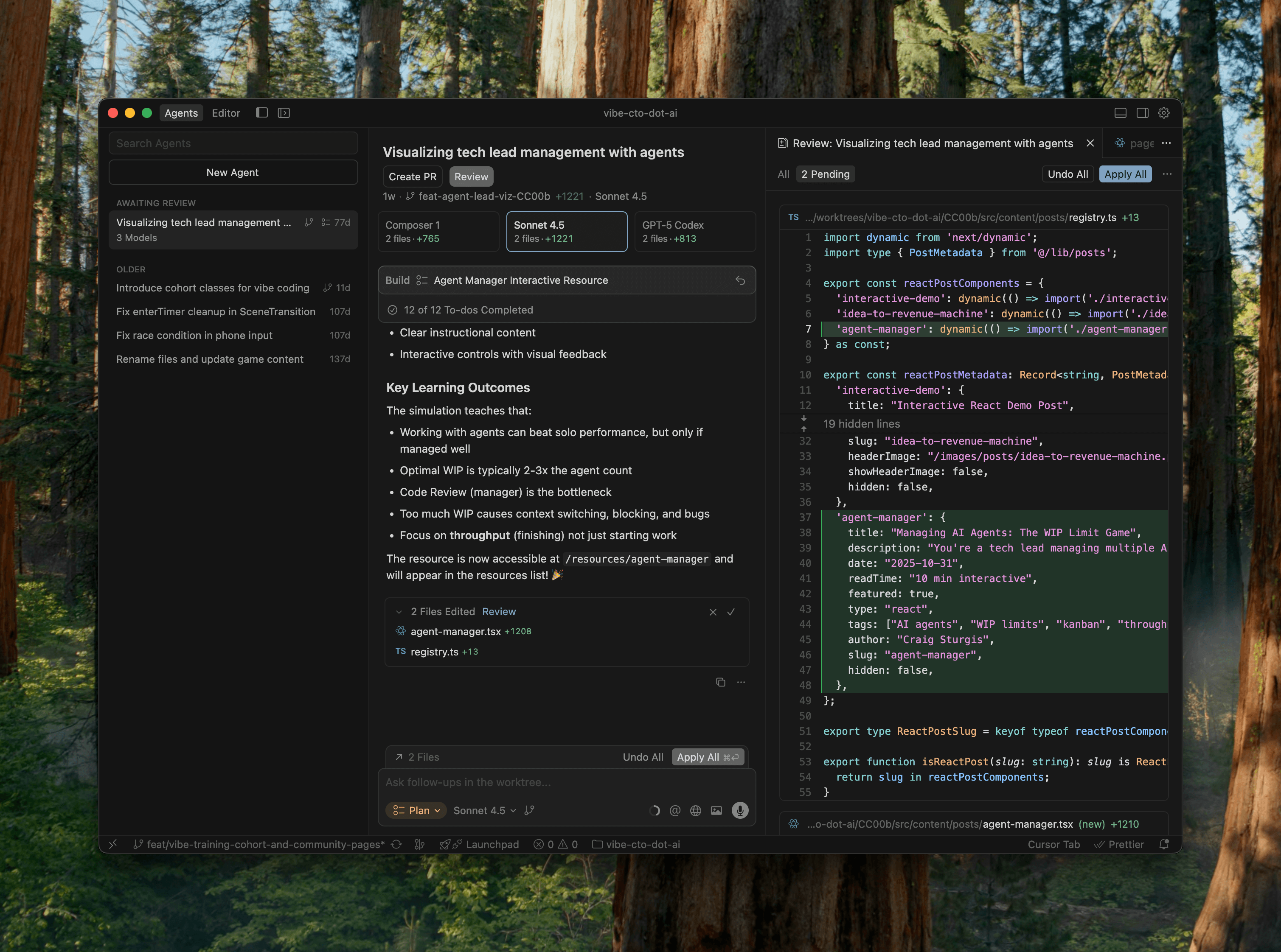

$200+ Multi-Agent Workflows: Cursor 2.0 vs. the AI Lab CLIs

A comprehensive comparison of multi-agent development workflows: Cursor 2.0 vs. Claude Code, Codex CLI, and custom scripts for serious engineering work

Cursor 2.0 promises to make multi-agent workflows the default for every augmented engineer: someone who codes heavily with AI tools but still sweats all the details.

After a bake-off, my verdict: Claude Code + my custom scripts still wins my $200/month slot, but I understand why others might choose differently.

TL;DR

- Cursor 2.0's multi-agent workflow is much more accessible and very fast, but for my serious work it still loses to a finely tuned setup of Claude Code + Codex CLI + custom scripts.

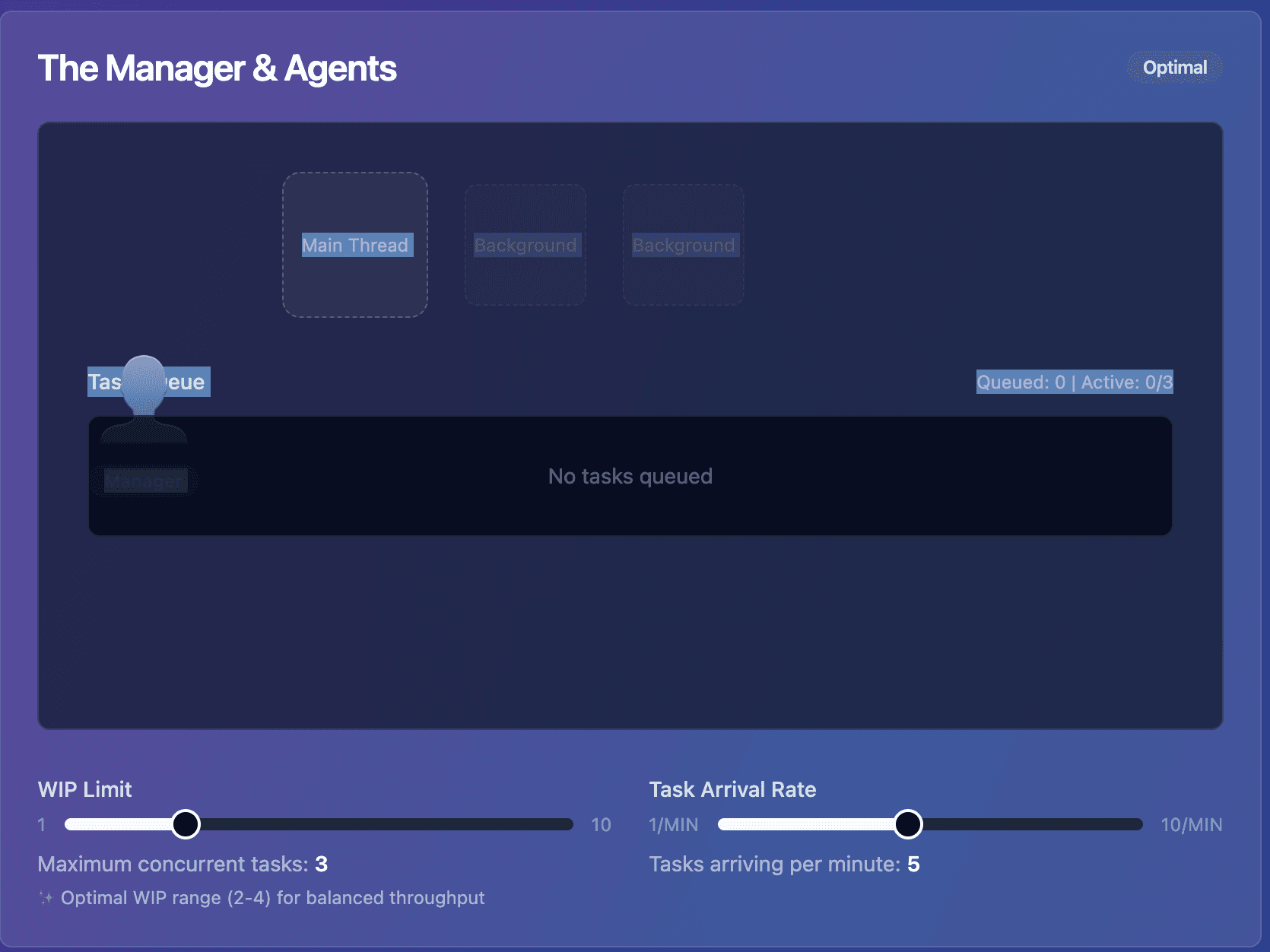

Key multi-agent workflow insight: WIP limits still really matter when managing AI agents.

The Problem

Having a well-tuned multi-agent development workflow is the most powerful way to do AI-augmented engineering these days. One person can get an impressive amount done through a team of agents.

But it's hard to manage well, it hasn't been very accessible, and in my experience it's really hard to sustain over long stretches.

My Current Stack

- Claude Code Max Plan ($200/mo)
  - Fast, powerful, a joy to use. I spend 90% of my engineering time with Claude.
- Codex CLI on ChatGPT Pro Plan ($200/mo)
  - Slower and not as nice to use, but it one-shots problems Claude gets stuck on.
  - I don't want to budget for two plans at this rate, so next month I'm going to try the $20/mo plan and see if it still plays this role well.
- Warp terminal ($20/mo plan, but I'm getting it free for a year through Lenny's Newsletter)
  - Really nice to use, with easy agent help right in the terminal when I need it
- Custom worktree management scripts I built with Claude Code
- Claude Code and Codex web apps for tightly scoped side tasks that run in the background
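For anyone who wants to script this themselves, here is a minimal sketch of a worktree helper. It is not the custom scripts mentioned above, just an illustration under assumptions: Python 3.9+, a base branch named `main`, and a hypothetical layout where each task gets an `agent/<task>` branch checked out in a sibling directory. The function names are made up for this example; `git worktree add -b` and `git worktree remove` are standard Git commands.

```python
import re
import subprocess
from pathlib import Path


def slugify(task: str) -> str:
    """Turn a free-form task name into a branch- and directory-safe slug."""
    slug = re.sub(r"[^a-z0-9]+", "-", task.lower()).strip("-")
    return slug or "task"


def worktree_path(repo: Path, task: str) -> Path:
    """Hypothetical layout: each task lives in a sibling dir, e.g. ../site-cohort-pages."""
    return repo.parent / f"{repo.name}-{slugify(task)}"


def add_worktree_cmd(repo: Path, task: str, base: str = "main") -> list[str]:
    """`git worktree add -b <branch> <path> <base>`: a new branch in its own working copy."""
    return ["git", "-C", str(repo), "worktree", "add",
            "-b", f"agent/{slugify(task)}", str(worktree_path(repo, task)), base]


def remove_worktree_cmd(repo: Path, task: str) -> list[str]:
    """Remove the working copy; the agent/<slug> branch is kept for review or a PR."""
    return ["git", "-C", str(repo), "worktree", "remove", str(worktree_path(repo, task))]


def run(cmd: list[str]) -> None:
    """Execute a command, raising CalledProcessError if git fails."""
    subprocess.run(cmd, check=True)
```

From the main checkout, something like `run(add_worktree_cmd(Path.cwd(), "cohort CTA pages"))` gives an agent its own isolated copy of the repo, and `run(remove_worktree_cmd(Path.cwd(), "cohort CTA pages"))` cleans it up once the branch has been pushed. Building the command as a list first keeps it easy to print or dry-run before touching the repo.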

Test cases

I tested with two real tasks for my website:

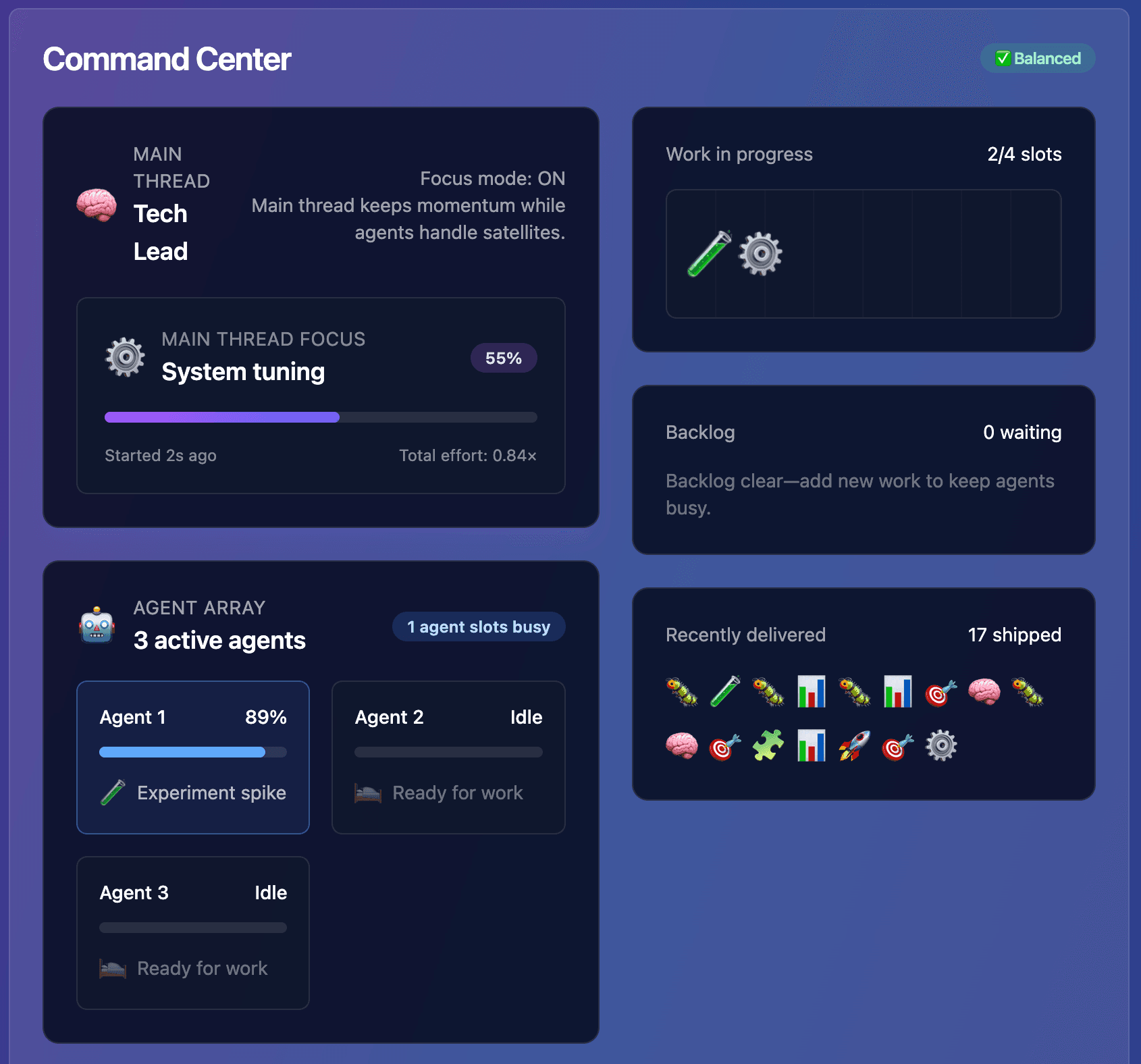

- Creating a new interactive resource to demonstrate the limits and challenges of managing multiple development agents

- Building new pages and CTAs for augmented engineering cohort coaching classes I want to test interest in

What worked well

Managing multiple simultaneous agents was easy and far more accessible than other approaches I've tried.

Running several different types of agents in parallel is a new trick, and it's something Cursor 2.0 does that's really hard to do other ways. It's a nice tool to have in your belt.

Cursor Composer has a reputation for being really fast, and boy does it live up to it. It's a nice change of pace from Claude Sonnet 4.5, which I find pretty fast, and GPT-5 Codex, which is fine on its own but painfully slow by comparison.

However, while Sonnet 4.5 and GPT-5 Codex both produced passable starts on the interactive resource, Composer failed completely. I gave it more than it could handle, and I had to stick to more tightly defined tasks.

I've attached screenshots of each model's initial output at the bottom of the post if you're curious.

Worktree management is handled for you, so you don't have to think about it. That's a big deal, because worktrees are one of the harder parts of this workflow to wrap your head around.

I also got solid ideas for making the visuals work in my interactive resource much more quickly than I would have in my current terminal workflow.

What wasn't so great

Code review friction

Code review still seems a little stuck in the previous Cursor paradigm. It was hard to switch between the various agents' outputs and review them.

The "Accept" vs. "Reject" approach to generated code just feels outdated now.

VS Code's source control panel handles review across multiple worktrees really well now, and Cursor does not.

I ended up submitting PRs instead of iterating locally. For pre-PR iteration, I think Codex and Claude Code on the web offer a better experience.

Parallel implementation drawbacks

Multiple parallel implementations of the same task only make sense in really targeted situations.

For example, one of my tasks called for a database migration, and each parallel agent wrote its own slightly different migration. I quickly had to eject and reset the task to avoid making a mess. I needed to lay the foundation first and only then switch to parallel implementations. Once I did that, the cohort class pages and CTAs came together fast, but a task that simple didn't really benefit from multiple implementations.
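One way to enforce that "foundation first" sequencing is a check that flags when more than one parallel branch adds a migration. This is a hypothetical sketch: the `db/migrations` directory, the `main` base branch, and both function names are assumptions for illustration. The Git options are standard `git diff` flags: `--name-only`, `--diff-filter=A` (added files only), and the three-dot `base...branch` range, which diffs against the point where the branch forked.

```python
import subprocess


def added_migrations(branch: str, base: str = "main",
                     migrations_dir: str = "db/migrations") -> list[str]:
    """List migration files that `branch` adds since it forked from `base`."""
    result = subprocess.run(
        ["git", "diff", "--name-only", "--diff-filter=A",
         f"{base}...{branch}", "--", migrations_dir],
        capture_output=True, text=True, check=True,
    )
    return [line for line in result.stdout.splitlines() if line]


def conflicting_branches(added: dict[str, list[str]]) -> list[str]:
    """Return the branches that add migrations, but only if more than one does.

    One branch adding a migration is fine; two or more means parallel agents
    are each reshaping the schema, so the foundation should be settled first.
    """
    with_migrations = sorted(branch for branch, files in added.items() if files)
    return with_migrations if len(with_migrations) > 1 else []
```

In practice you'd build the input with `{b: added_migrations(b) for b in branches}` and hold off on fanning out (or merging) whenever `conflicting_branches` returns anything.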

Maybe over time I'll get better at choosing when to use parallel implementations, but I don't see myself using this approach often; it mostly seems to burn through a lot more tokens for little additional benefit.

I don't think I could have run many of these on the $20/month plan I took a flyer on for this test.

Speed vs. Quality

Composer was really fast (it felt 2 to 3x faster than Sonnet), but its output quality isn't high enough for my standards. What I want is something as good as or better than Sonnet 4.5 or GPT-5 Codex, but as fast as Composer.

I think we'll get there, but it's going to take time for the labs to figure it out economically.

Traditional IDE-first vs. terminal-first

Lastly, I now find myself more comfortable in a terminal-based interface with a code window as a secondary view, rather than the other way around. That's a matter of personal preference, but it feels like the better path for me. It's a strange place to be, given that I've been more at home in an IDE for most of my coding career.

Multi-agent workflow lessons so far

Multi-agent workflows are exhilarating but can be mentally exhausting to manage. It's tempting to spin up too many tasks and end up getting less done overall than you would going one task at a time. WIP limits exist for a reason, and they're still valuable here to keep things in check, even if we might raise the limits slightly in some areas given these new tools.

It pays to be intentional about when to go single-threaded vs. multi-threaded. Some things need careful sequencing to avoid making a mess.

My best recommendation: stick with a single "main thread" task where you're actively pairing, and, if you're under your WIP limit, spin off smaller, tightly scoped side tasks that are as closely related to your main task as possible, to limit how much context switching is happening.
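If it helps to make that rule concrete, here's a small, hypothetical model of it in Python: one main task, with side tasks admitted only while you're under a WIP limit. The `AgentBoard` class and the default limit of 3 are illustrative assumptions, not a recommended number, and the sketch doesn't try to judge how closely a side task relates to the main one; that part stays a human call.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentBoard:
    """One actively paired "main thread" plus side tasks, capped by a WIP limit."""

    wip_limit: int = 3  # hypothetical default: total active tasks, main thread included
    main: Optional[str] = None
    side: list[str] = field(default_factory=list)

    def active(self) -> int:
        """Count every task currently in flight, main thread included."""
        return (1 if self.main is not None else 0) + len(self.side)

    def start_main(self, task: str) -> None:
        """Only one main-thread task at a time."""
        if self.main is not None:
            raise RuntimeError(f"finish or park {self.main!r} before starting a new main task")
        self.main = task

    def spin_off(self, task: str) -> bool:
        """Start a side task only while a main task exists and we're under the limit."""
        if self.main is None:
            raise RuntimeError("start a main-thread task before spinning off side tasks")
        if self.active() >= self.wip_limit:
            return False  # at the limit: finish something before starting more
        self.side.append(task)
        return True

    def finish(self, task: str) -> None:
        """Mark a task done; raises ValueError if it isn't active."""
        if task == self.main:
            self.main = None
        else:
            self.side.remove(task)
```

With a limit of 3, a main task plus two side tasks fills the board, and the next `spin_off` returns `False` until one of them is finished. Counting the main thread against the limit is a deliberate choice: it's the task demanding the most attention.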

Running multiple agents in parallel on the same task is great when the right design is unclear but the foundational elements are solid.

General workflow recommendations

Work where you're comfortable, but if you can get comfortable in the terminal, that's where you'll have the most power right now.

Be intentional about when and how you apply multi-agent workflows. Don't be afraid to dig into worktrees and have agents help you script the workflow that works best for you, and potentially share it with your team.

Once implementation plans are firmed up, consider faster models like Composer or Claude Haiku 4.5 on your "main thread" to maintain flow state with less context switching.

Next things to explore

- I'm going to check back in a month or three to see how Cursor improves this paradigm. I don't think they're a team to be underestimated.
- I want to try running Composer via Cursor's CLI for more tightly defined tasks and do a bake-off against Haiku 4.5.
- I want to dig further into Spec Kit as a way to put more consistent structure around planning and scoping individual tasks.
- Droid is getting a lot of buzz as a multi-model terminal agent, so I'll give it a similar workout in an upcoming "can you earn my $200/month daily driver" evaluation.

What approaches are you using to conduct your symphony of agents? Reach out and let me know on LinkedIn. I'm actively testing new workflows and always looking for ideas.

Parallel Implementation Screenshots
